Unitree - Aliengo Basic Quadruped Robot

Aliengo is a 19 kg robot dog developed by Unitree, one size larger than its 12 kg sibling, the A1 quadruped walking robot. It is a medium-sized machine, smaller than large robots of around 30 kg such as Boston Dynamics' Spot.

Equipped with advanced manoeuvrability and expandable machine-vision functions, it can walk over many kinds of rough terrain, including factories, plants, tunnels and piping facilities, construction sites, agricultural land, and forests. It is expected to be used for on-site patrol monitoring and visual inspection. Beyond industrial use, it is also a strong platform for research and entertainment.

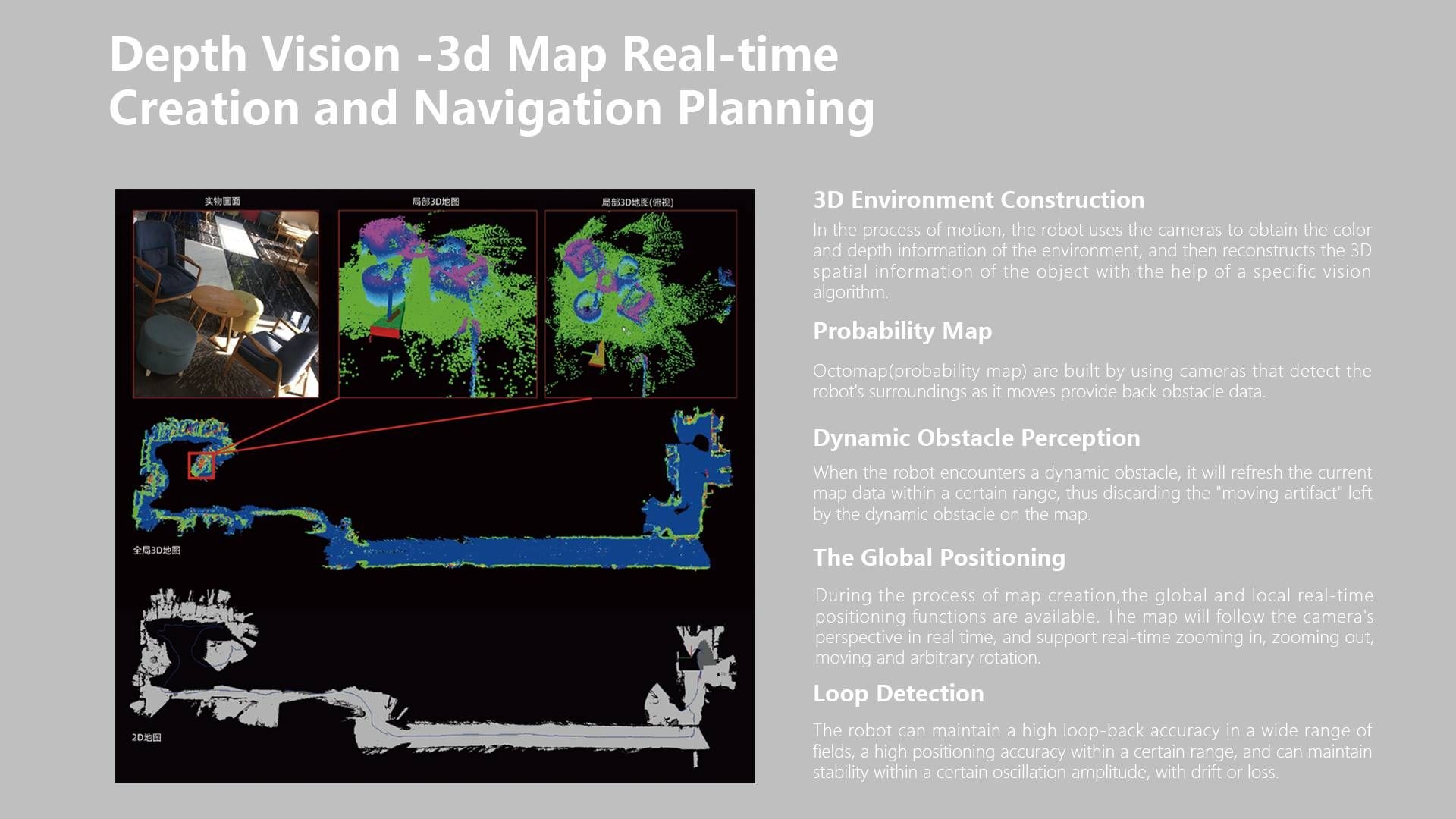

Depth perception vision system

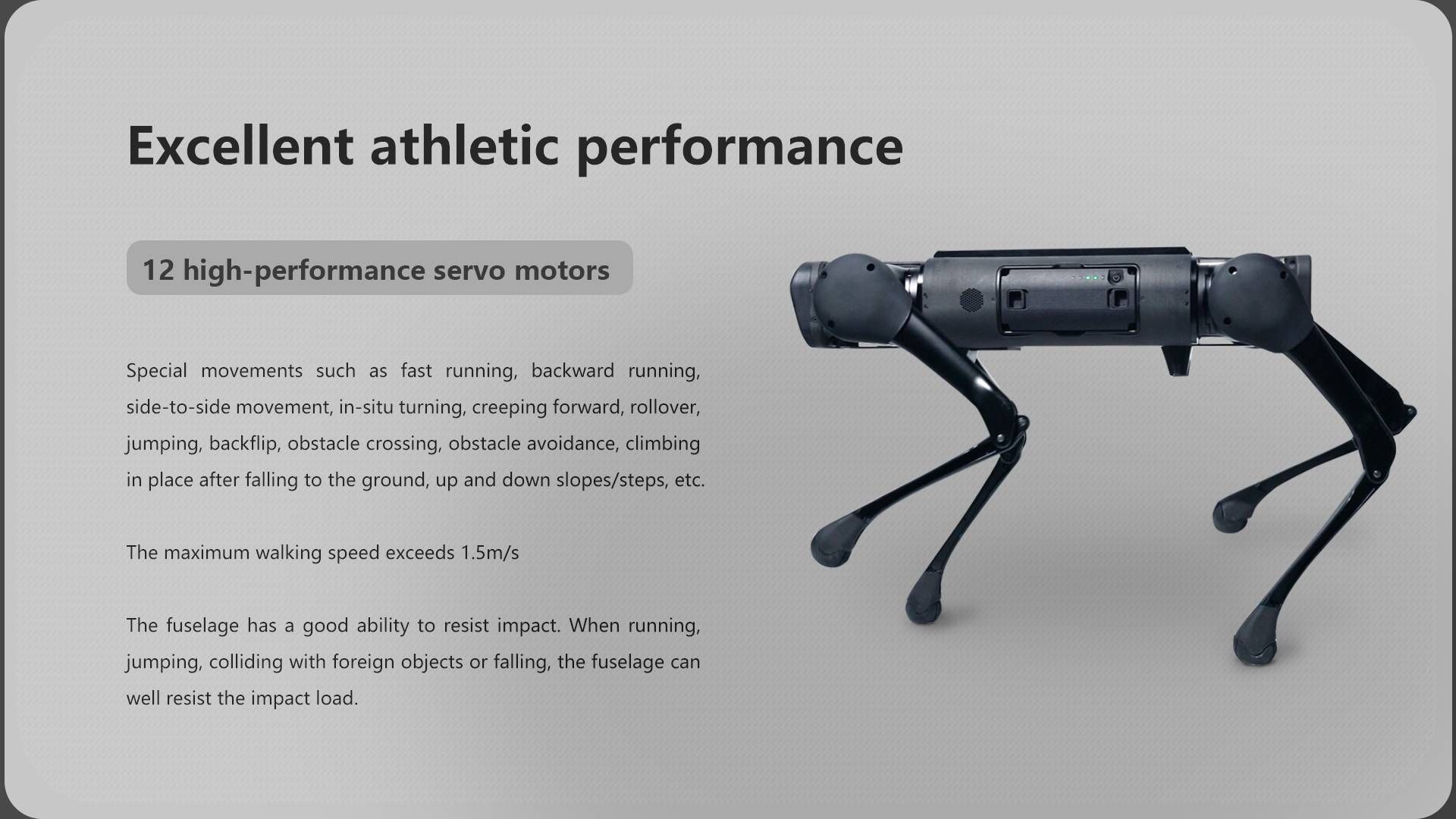

Explosive athletic performance

Adapts to complex terrain

Long battery life and heavy payload capacity

Rich external interfaces

All-in-one body

Advanced protection level

Built-in intelligent air cooling system

Aliengo is a quadruped robot capable of reconnaissance and inspection tasks, especially on rough terrain, thanks to its excellent balance. This balance also lets it perform complex maneuvers such as somersaults.

Its main features are:

| Feature | Details |
|---|---|
| Equipped with | |
| Hardware interfaces | Also provides external output voltages of 5 V, 12 V, 19 V and 24 V, making it convenient for users to add external payload equipment. |
| Supports | |
| With its 2 lidars, Aliengo supports 4 perception functions | |

Field Applications

R&D

Load transport

Inspection

Security

Entertainment

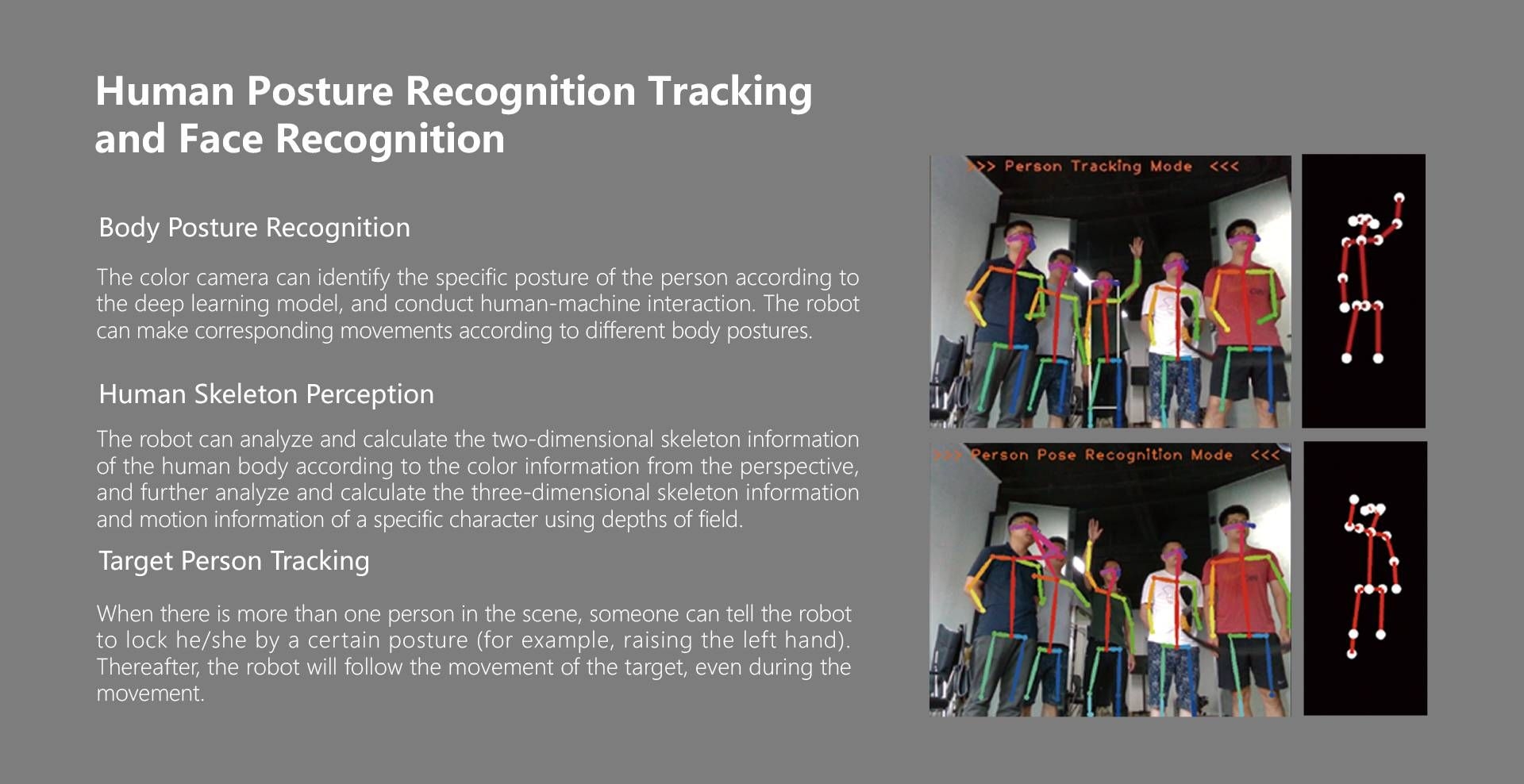

HUMAN POSTURE RECOGNITION, TRACKING AND FACE RECOGNITION

1. BODY POSTURE RECOGNITION

Using a deep learning model, the colour camera can identify a person's specific posture, enabling human-machine interaction: the robot responds to different body postures with corresponding movements.

2. HUMAN SKELETON PERCEPTION

From the colour image in its field of view, the robot computes the two-dimensional skeleton of the human body. Using depth information, it then computes the three-dimensional skeleton and motion of a specific person.
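The step from a 2D skeleton plus depth to a 3D skeleton can be illustrated with a standard pinhole-camera back-projection. The sketch below is not Unitree's implementation; the camera intrinsics (`fx`, `fy`, `cx`, `cy`), the keypoint names and the `depth_lookup` callable are hypothetical stand-ins for whatever the robot's camera and pose model actually provide. Motion is approximated by a finite difference between two frames.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional, Tuple

Point3 = Tuple[float, float, float]


@dataclass
class Intrinsics:
    # Hypothetical pinhole intrinsics, in pixels.
    fx: float  # focal length along x
    fy: float  # focal length along y
    cx: float  # principal point x
    cy: float  # principal point y


def back_project(u: float, v: float, depth_m: float, k: Intrinsics) -> Point3:
    """Lift pixel (u, v) with metric depth into a 3D point in the camera frame."""
    x = (u - k.cx) * depth_m / k.fx
    y = (v - k.cy) * depth_m / k.fy
    return (x, y, depth_m)


def skeleton_to_3d(
    keypoints_2d: Dict[str, Tuple[float, float]],
    depth_lookup: Callable[[float, float], Optional[float]],
    k: Intrinsics,
) -> Dict[str, Point3]:
    """Convert 2D keypoints to 3D joints, skipping joints with no valid depth."""
    skeleton = {}
    for name, (u, v) in keypoints_2d.items():
        z = depth_lookup(u, v)
        if z is None or z <= 0:
            continue  # missing or invalid depth reading at this pixel
        skeleton[name] = back_project(u, v, z, k)
    return skeleton


def joint_velocities(
    prev: Dict[str, Point3], curr: Dict[str, Point3], dt: float
) -> Dict[str, Point3]:
    """Finite-difference velocity (m/s) for joints present in both frames."""
    return {
        name: tuple((c - p) / dt for p, c in zip(prev[name], curr[name]))
        for name in curr
        if name in prev
    }
```

For example, with `Intrinsics(600.0, 600.0, 320.0, 240.0)`, a keypoint at the principal point with 2 m depth maps to `(0.0, 0.0, 2.0)`, directly in front of the camera.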

3. TARGET PERSON TRACKING

When there is more than one person in the scene, a person can tell the robot to lock onto them with a specific posture (for example, raising the left hand). Thereafter, the robot follows the target, even while the target is moving.
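A gesture-triggered lock followed by frame-to-frame association could work roughly as below. This is a hypothetical sketch, not Unitree's method: the keypoint names (`left_wrist`, `left_shoulder`), the pixel margin and the maximum jump distance are assumptions. It locks only when exactly one person raises their left hand, then re-identifies the target in each new frame as the detection whose keypoint centroid is nearest to the previous one.

```python
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float]
Person = Dict[str, Point]  # hypothetical keypoint name -> (u, v) pixels; v grows downward


def left_hand_raised(p: Person, margin_px: float = 20.0) -> bool:
    """True if the left wrist is clearly above the left shoulder in the image."""
    if "left_wrist" not in p or "left_shoulder" not in p:
        return False
    return p["left_wrist"][1] < p["left_shoulder"][1] - margin_px


def centroid(p: Person) -> Point:
    """Mean position of all detected keypoints of one person."""
    us = [u for u, _ in p.values()]
    vs = [v for _, v in p.values()]
    return (sum(us) / len(us), sum(vs) / len(vs))


def pick_target(people: List[Person]) -> Optional[int]:
    """Index of the single person raising their left hand; None if zero or several do."""
    raised = [i for i, p in enumerate(people) if left_hand_raised(p)]
    return raised[0] if len(raised) == 1 else None


def follow(prev_center: Point, people: List[Person], max_jump_px: float = 80.0) -> Optional[int]:
    """Associate the locked target with the nearest detection in the new frame."""
    best, best_d = None, max_jump_px
    for i, p in enumerate(people):
        if not p:
            continue  # no keypoints detected for this person
        cu, cv = centroid(p)
        d = ((cu - prev_center[0]) ** 2 + (cv - prev_center[1]) ** 2) ** 0.5
        if d < best_d:
            best, best_d = i, d
    return best
```

Nearest-centroid association is the simplest possible choice; a real tracker would typically add appearance cues or motion prediction to survive occlusions and crossing paths.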

4. FACE RECOGNITION AND APPEARANCE DETERMINATION (UNDER DEVELOPMENT)

Working from the robot's point of view, artificial intelligence algorithms automatically perform face recognition and crowd classification, identifying attributes such as gender, age and clothing.


| Specification | Value |
|---|---|
| Manufacturer | Unitree |